Technology Insights

How to Adopt AI Development at Scale: 6 Lessons from AppDirect’s Leap from 0% to Almost All AI-Generated Code

By Mathew Spolin / February 2, 2026

In this insightful article re-shared from LinkedIn, Mathew Spolin, AppDirect's SVP of Engineering, shares how AppDirect transformed its software development by adopting AI-assisted tools at scale, going from zero to almost entirely AI-assisted code in just one year. Readers will gain valuable lessons on the organizational changes, metrics, and mindset shifts necessary to successfully integrate AI into engineering workflows and dramatically accelerate delivery without compromising quality.

One Year of AI-Assisted Development at Scale

In December 2025, almost all of the pull requests merged by our engineering team were AI-assisted. AI-generated lines of code were the majority of everything we committed.

Two years earlier, that number was zero.

This is the story of how a global engineering organization with hundreds of engineers adopted AI development tools—not as a pilot, not as an experiment, but as the way we build software. It's also a story about what changes when you actually do this at scale.

The wood racquet problem

Here's an analogy I use with my team.

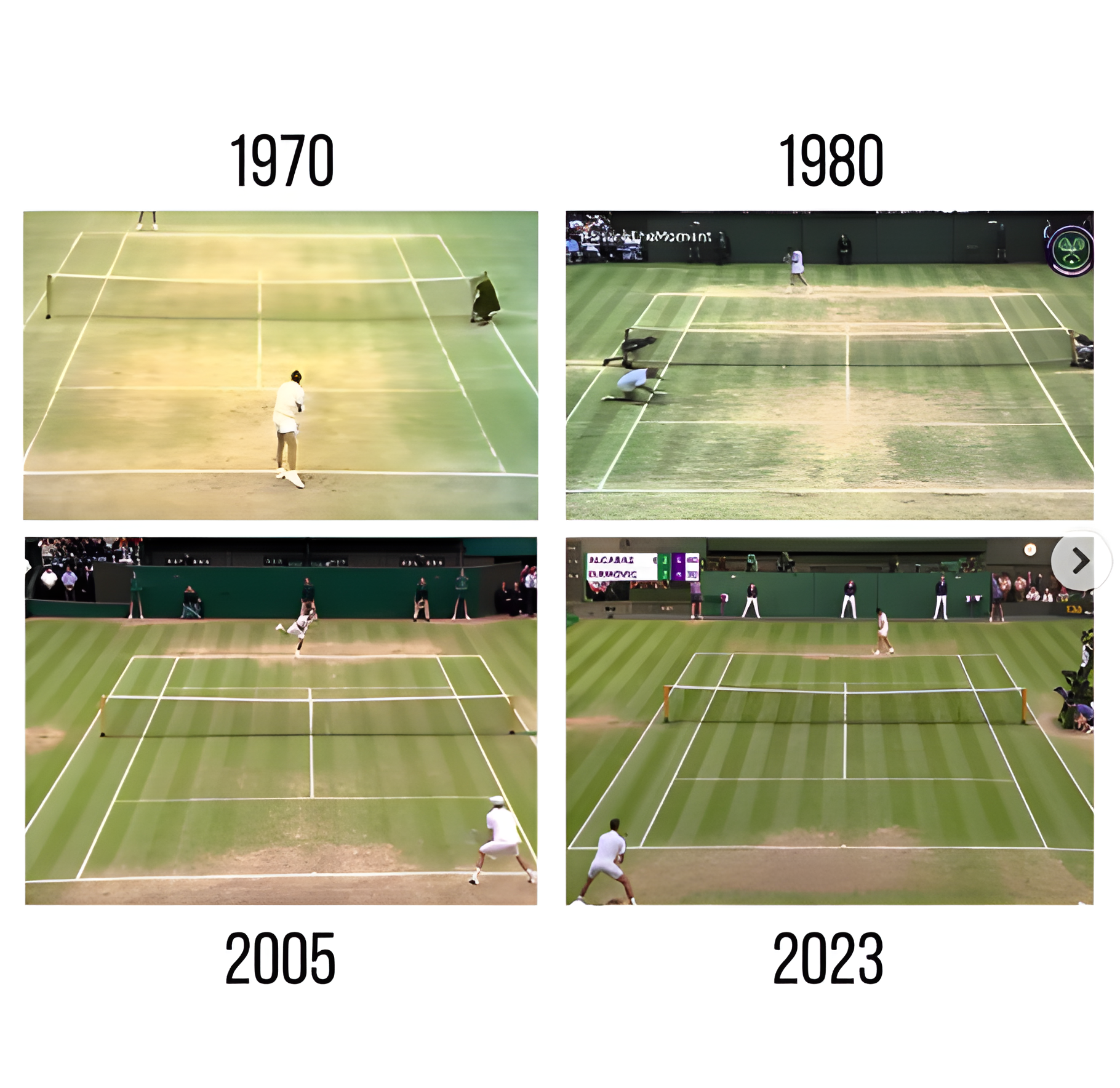

If you watch professional tennis today, the athletes are superhuman. They stand two meters behind the baseline and trade forehand missiles at 100+ mph. The game has evolved because the equipment evolved. Players train better, and use modern racquets, strings, techniques.

The images show the courts at the Wimbledon finals in 1970, 1980, 2005, and 2023. The wear patterns in the grass differ as play style has evolved.

You don't have to play like that. You could use a wood racquet. But you won't win at Wimbledon.

Software development is running the same reel, just at 1000x speed.

Early days, you wrote every line, waited for nightly builds, hoped it shipped. Then cloud CI/CD and open-source arrived; teams that automated pulled ahead. Now, AI tools draft code, write tests, and refactor on cue. Developers who start with an AI prompt ship at superhuman speed.

Those who don't are playing serve-and-volley on a slow court.

If your competitors are shipping twice as fast with AI agents, sticking to a wood-racquet workflow is a strategic mistake.

Starting slow: The legal and security hurdles

We didn't jump in immediately. In 2023, when tools like GitHub Copilot were gaining traction, I wanted to roll them out across the organization. But we couldn't.

At the time, there was an ongoing lawsuit in the news involving Copilot. There were legitimate concerns about intellectual property, because these tools send your code outside your network to foundation model providers. In our assessment at the time, this was risky. We couldn't risk having our code used for training.

So we proposed a cautious rollout: start with a pilot in one team, assess the risks, expand carefully. GitHub Copilot seemed like the safest bet since GitHub already had our code.

By January 2024, we had Copilot available organization-wide. It was helpful, providing autocomplete on steroids. Developers accepted suggestions about a third of the time. We saw around 60,000 completions generating about 80,000 lines of code that year.

It was a start. It felt like an improvement. But it wasn't transformational.

The switch to agentic development

In early 2025, we made a decision: standardize on a single tool as our AI IDE.

Why pick one tool? There are dozens of AI development tools, with more launching every week. We evaluated many of them. But there are real costs to proliferation:

Security and privacy—Every tool sends code to external servers. Every tool carries IP risk. There's a vetting process, and it's not free. At scale, there's an opportunity cost: time, legal review, procurement.

Adoption—When everyone uses different tools, you can't share best practices. You can't build organizational muscle memory. You can't measure what's working.

Cost—These tools aren't cheap at scale. We're not going to vet and pay for every new thing that launches.

We standardized on a single AI-native IDE that met our security, privacy, and workflow needs at the time, and went all in.

By the end of February 2025, we started the migration. By March, adoption was organization-wide.

Building the metrics

I know you've heard this before: When it comes to organizational change, if you can't measure it, you can't manage it.

We already had engineering metrics that we tracked around delivery predictability, quality scores, cycle time. But AI adoption required new instrumentation.

Accepted lines—This measures how many lines of code a tool suggests that a developer accepts. It doesn't mean they check it in, since they might revise or delete it later. But it's a proxy for how much the tool is helping. We calculate a run rate: the annualized number of expected lines based on current usage. This lets us compare tools and track adoption over time.

AI-assisted PRs—What percentage of merged pull requests used an AI tool to generate some or all of the code?

Lines accepted vs. lines committed: Are developers actually using what the AI suggests? Or are they accepting suggestions and then deleting them?

Impact—This is a metric we've tracked for years. It measures the marginal change to our codebase: not just lines added, but deletions, insertion points, complexity changes. It excludes code that's merged all at once, such as from acquisitions. For years, this metric never went above our target. People actually complained to me that the target was impossible. By May of 2025, when adoption hit scale, we roughly doubled our target.
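As a rough illustration, here is a minimal Python sketch of how the accepted-lines run rate, the AI-assisted PR share, and the accepted-versus-committed comparison could be computed. The function names, the `PullRequest` record, and the sample figures are all assumptions made up for this example, not AppDirect's actual instrumentation. The run rate is assumed to be a simple linear extrapolation of a measurement window to 365 days.

```python
from dataclasses import dataclass


@dataclass
class PullRequest:
    """Hypothetical record for a merged PR; the fields are illustrative."""
    ai_assisted: bool      # did an AI tool generate some or all of the code?
    lines_committed: int   # lines that actually landed in the merge


def annualized_run_rate(accepted_lines: int, window_days: int) -> float:
    """Extrapolate accepted lines in a window to a 365-day run rate."""
    if window_days <= 0:
        raise ValueError("window_days must be positive")
    return accepted_lines * 365 / window_days


def ai_assisted_share(prs: list[PullRequest]) -> float:
    """Fraction of merged PRs flagged as AI-assisted (0.0 if none merged)."""
    if not prs:
        return 0.0
    return sum(pr.ai_assisted for pr in prs) / len(prs)


def committed_to_accepted_ratio(lines_committed: int, lines_accepted: int) -> float:
    """Share of accepted lines that survived into commits."""
    if lines_accepted == 0:
        return 0.0
    return lines_committed / lines_accepted


# Made-up sample data, purely for illustration.
sample_prs = [
    PullRequest(ai_assisted=True, lines_committed=120),
    PullRequest(ai_assisted=True, lines_committed=40),
    PullRequest(ai_assisted=False, lines_committed=15),
    PullRequest(ai_assisted=True, lines_committed=300),
]

run_rate = annualized_run_rate(accepted_lines=7_000, window_days=7)
share = ai_assisted_share(sample_prs)
ratio = committed_to_accepted_ratio(lines_committed=460, lines_accepted=575)

print(f"Annualized accepted lines: {run_rate:,.0f}")
print(f"AI-assisted PR share: {share:.0%}")
print(f"Committed/accepted ratio: {ratio:.2f}")
```

A ratio well below 1.0 would suggest developers are accepting suggestions and then deleting them. The Impact metric is left out of the sketch because its formula is not spelled out here.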

The numbers

Here's what we observed.

At peak adoption in March 2024, Copilot was generating about 250,000 accepted lines annually across our team. That was after 14 months of adoption.

By comparison, the AI IDE we adopted, measured over a 7-day window in the spring, was at 3 million accepted lines annualized. That's 12x more. By the end of the year, this metric was over 11 million. That's 44x more (4,400%).

This isn't a marginal improvement. It's a different category of tool. You don't see 44x improvements very often in business.
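The multiples follow directly from the annualized figures quoted in this section. A quick check in Python, using only the rounded numbers from the text (the variable names are just for illustration):

```python
# Annualized accepted lines, rounded as quoted in the text.
copilot_peak = 250_000        # Copilot at peak adoption
ai_ide_spring = 3_000_000     # AI IDE, 7-day window in the spring
ai_ide_year_end = 11_000_000  # AI IDE at year end ("over 11 million", so a lower bound)

spring_multiple = ai_ide_spring / copilot_peak
year_end_multiple = ai_ide_year_end / copilot_peak

print(f"Spring: {spring_multiple:.0f}x")      # prints "Spring: 12x"
print(f"Year end: {year_end_multiple:.0f}x")  # prints "Year end: 44x"
```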

By December 2025:

Over 90% of PRs were AI-assisted

The vast majority of merged lines were AI-generated

What actually changed

The numbers are impressive, but what matters is what changes in practice.

Speed increased—Developers who start with an AI prompt ship faster. The cycle time from idea to merged code compressed. Our "Impact" metric, measuring the marginal change to the codebase, jumped materially in the first two months.

Quality held or improved—This was the concern: would AI-generated code be slop? Would we be shipping bugs faster? We tracked this. Customer incidents escalated to engineering dropped materially year-over-year. Our quality score measures security, reliability, performance, responsiveness, and defect detection. This measurement went up significantly, even as we scaled the business.

Onboarding got faster—Engineers told us in surveys that the AI IDE helps them navigate unfamiliar parts of the system. When you're new to a codebase, AI that understands the context is like having a senior engineer available 24/7.

The work changed—Software development used to mean typing symbols and syntax on a keyboard. Tabs or spaces? What editor? Those debates feel quaint now. The craft is shifting toward architecture, assumptions, and product thinking.

What we had to change

Adoption at scale forces process evolution

You can't just hand developers a new tool and expect everything else to stay the same.

Requirements had to improve

If a developer can get five or ten times as much done with agentic development in an AI IDE, they need more stories, more designs. Our PMs and designers had to keep up. We moved from vague scopes and slides toward more complete requirements documents. Instead of flat designs, epics came with functional prototypes so issues usually found during implementation got worked out earlier.

Iteration cycles shortened

Two-week sprints feel too long when code ships in hours. Some teams moved to 1-3 day cycles to tighten feedback loops.

Guardrails became essential

We can't have engineers using free trials of random AI tools with our code and data. We don't want confidential information used in training. All the best models are available through our approved tools, so we need to make sure people use those.

Documentation became more important, not less

Here's a counterintuitive one: AI tools consume documentation. If the context isn't written down, the AI can't use it. Our developer experience survey showed documentation as a major pain point, and one consequence is that it weakens our AI tools. Technical documentation needs to be treated like a product.

The mindset shift

I think we're in the middle of a science-fiction-level advance, and as strange as it sounds to people who are perpetually online, not enough people realize it yet.

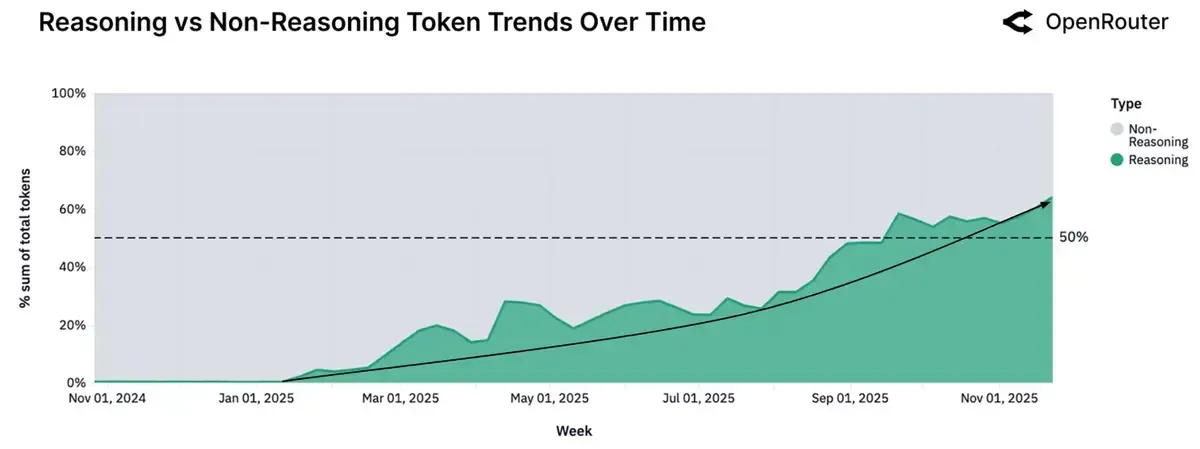

Consider that more than half of all token usage through OpenRouter is now thinking or reasoning tokens. Twelve months ago, there were no public models that used reasoning. This is moving incredibly fast.

Reasoning Models Now Represent Half of All Usage, versus zero usage one year before

Consider self-driving cars. A lot of people still think of them as a tech moonshot. Tourists in San Francisco still take photos and videos of Waymos, navigating along with no human in the driver seat. Such a novelty! But I've ridden literally thousands of miles in them. There's something that happens when you realize you can get from A to B that way. It stops being theoretical.

The latest coding models from Anthropic, OpenAI, and Google provide that same experience. The ability to get from A to B with AI driving. Not everyone fully realizes this yet. But the teams that do are building differently.

In 2025, we asked developers to change their professional practices and use an AI IDE. Our direction for 2026 is even more clear: agentic development will be a core skill for the engineers on our team.

6 Lessons for other organizations

If you're thinking about doing this at scale, here's what I'd suggest:

Pick one tool and commit. The proliferation of options is a trap. Security review, procurement, training—these have real costs. Pick the best tool, standardize, and build organizational capability.

Measure relentlessly. Build dashboards that track adoption: accepted lines, AI-assisted PRs, code quality metrics. What gets measured gets managed. Put these metrics in your leadership reviews.

Start with the willing. Your early adopters will show what's possible. Let them experiment, share their wins, and pull others along. Forced adoption creates resentment; demonstrated value creates demand.

Expect process change. AI-assisted development is faster, which means your bottlenecks shift. Requirements, design, review, testing—these all need to evolve. Don't just add AI to a 2024 process.

Maintain guardrails. Security and IP concerns are real. Don't let engineers use random tools with company code. Vet your tools, negotiate appropriate terms, and enforce usage policies.

Invest in documentation. AI tools are only as good as the context they have. If your docs are out of date, incomplete, or missing, you're handicapping your AI investment.

Where this goes

By my estimate, within this year, PRs won't just be assisted by AI. Instead they'll be completely handled by AI, with human planning and oversight. In a few organizations already, things work this way. The ratio of human-written to AI-generated code will continue to shift.

This doesn't mean engineers become obsolete. It means the job changes. More architecture, more product thinking, more judgment about what to build. Less typing. Less syntax. Less boilerplate.

The organizations that adapt will ship faster, with higher quality, at lower cost. The ones that don't will be playing with wood racquets while everyone else has moved on.

We went from 0% to over 90% in about a year. The hard part wasn't the technology. It was the organizational change. The metrics. The process evolution. The mindset shift.

But if you do it right, you don't just get faster development. You get a fundamentally different way of building software.

Mathew Spolin leads global engineering at AppDirect, where his team has been tracking and optimizing AI adoption across a large distributed team. You can read Mathew's original LinkedIn article here.
